Fixes for the “Broken” Nursing Home Star Ratings Part 3: Quality

02 Jun

Welcome to the third installment of our four-part series on improving the CMS Five-Star rating system for nursing homes. This month, we move from Staffing to Quality, the last of the three domains.

Why Is Quality Last?

The fact that Quality is last is the perfect place to start. Isn’t it strange that patient outcomes, ostensibly the entire reason for the existence of the SNF, are considered last in the calculation of the Overall Five-Star Rating? Survey sets the bar, and Staffing and Quality can each only raise or lower it by one peg. While a ‘bonus’ star can be earned for either a 4 or a 5 in Staffing (assuming it’s higher than Survey), only a perfect 5-star rating in Quality can boost a facility. And that’s only if they aren’t already at five stars! A facility that’s 4 in Survey and 5 in Staffing doesn’t need to bother striving for 5 in Quality. If a facility is 1 star in Survey or has earned the “Red Hand” for abuse, they’re limited to one bonus star. So again, if they’ve earned it for Staffing, there’s no incentive to boost Quality. We understand that CMS wants to punish poor-performing facilities. It’s just a shame that it has to come at the expense of the domain that seems to matter most to patients: the outcomes of their care.
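To make the ordering problem concrete, here is a minimal Python sketch of the overall rating logic as we’ve described it above. It is an illustration, not CMS’s official implementation. The function and parameter names are ours, and two details are assumptions not spelled out in this post: that a 1-star Staffing or Quality rating is what costs a facility a star, and that the result is kept between 1 and 5 after each step.

```python
def overall_rating(survey: int, staffing: int, quality: int,
                   abuse_icon: bool = False) -> int:
    """Sketch of the Overall Five-Star calculation described in this post.

    Hypothetical helper: names and the penalty/clamping details are
    assumptions for illustration, not CMS's published code.
    """
    for name, value in (("survey", survey), ("staffing", staffing),
                        ("quality", quality)):
        if value not in range(1, 6):
            raise ValueError(f"{name} must be between 1 and 5, got {value}")

    def clamp(stars: int) -> int:
        # Assumption: the rating is kept within 1..5 after every step.
        return max(1, min(5, stars))

    # Step 1: Survey sets the bar.
    rating = survey

    # Step 2: Staffing adds a star for a 4 or 5 that beats Survey.
    # Assumption: a 1-star Staffing rating removes a star.
    if staffing >= 4 and staffing > survey:
        rating += 1
    elif staffing == 1:
        rating -= 1
    rating = clamp(rating)

    # Step 3: Quality adds a star only for a perfect 5.
    # Assumption: a 1-star Quality rating removes a star.
    if quality == 5:
        rating += 1
    elif quality == 1:
        rating -= 1
    rating = clamp(rating)

    # Cap: 1-star Survey or the abuse icon limits a facility to one bonus star.
    if survey == 1 or abuse_icon:
        rating = min(rating, survey + 1)

    return rating


if __name__ == "__main__":
    print(overall_rating(4, 5, 3))  # 5
    print(overall_rating(4, 5, 5))  # 5, so the perfect Quality score changed nothing
    print(overall_rating(1, 5, 5))  # 2, the second bonus star is capped away
    print(overall_rating(3, 3, 4))  # 3, a 4 in Quality earns no boost
    print(overall_rating(3, 3, 5))  # 4
```

The second and third calls show the incentive gap: once Staffing has delivered the bonus star, a perfect Quality score leaves the overall rating unchanged.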

A Missing Element: The Patient’s Perspective

While we’re at it, has anyone other than family and lawyers bothered to ask the residents about their experiences? Reviews are embedded into every area of our lives now, but the Five-Star system surprisingly has no measure of patient satisfaction. Fifteen clinical outcomes are considered, and the closest thing to a satisfaction measure is probably successful discharge to community. Sadly, all this measure does is check that the patient wasn’t readmitted and didn’t die. So a measure of patient satisfaction isn’t in place today, but it is at least on CMS’s radar. Buried on page 167 of a recent lengthy interim rule is a mention of a patient-reported measure called PROMIS being considered for inclusion in the VBP program. But in our experience, “under consideration” is another way of saying “at least five years out”.

Stalled Progress and Issues with Inflation

So CMS moves slowly and evaluates any changes to the Quality Measures thoroughly before implementation. But despite the often lengthy evaluation periods, CMS had been doing a good job of keeping things updated pre-Covid. A brief timeline:

- Eliminated a measure, long-stay residents who were physically restrained, which was very close to zero nationwide

- Added a measure, long-stay emergency department transfers, matching the short-stay measure

- Changed the measures short-stay pressure ulcers and successful discharge to community to match with the QRP measures for greater consistency

- Adjusted the QM cutpoints to address ratings inflation and encourage further improvements

- Eliminated both Pain measures

- Introduced separate high-level ratings for short and long stay to help families better understand a home’s performance in the type of care their family member will receive

- Announced a plan to adjust the QM cutpoints every six months starting in April 2020

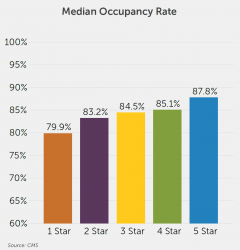

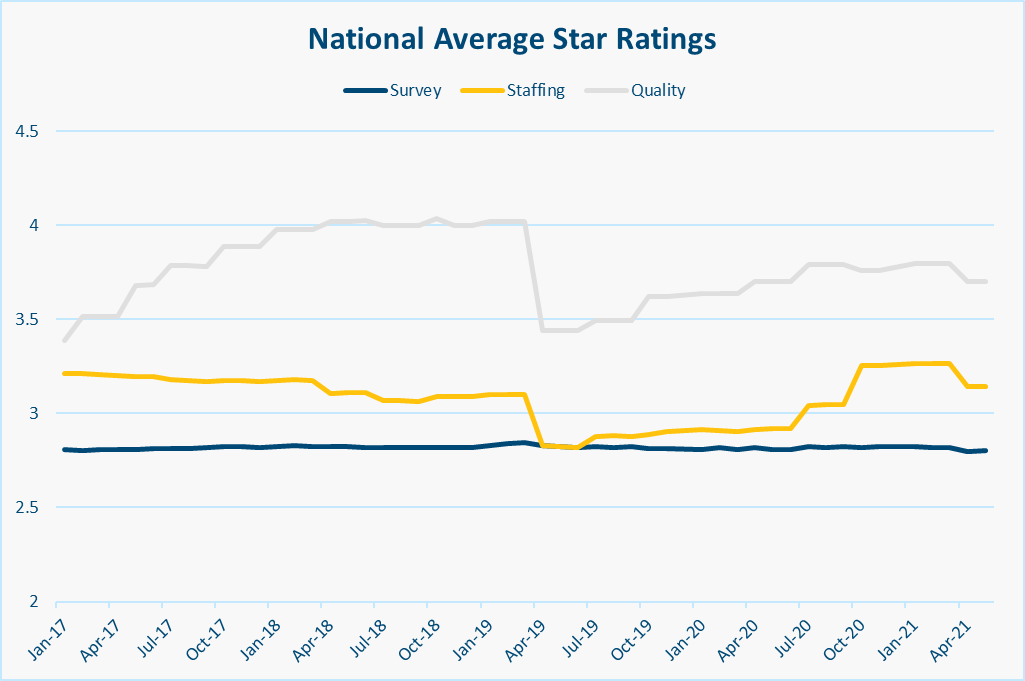

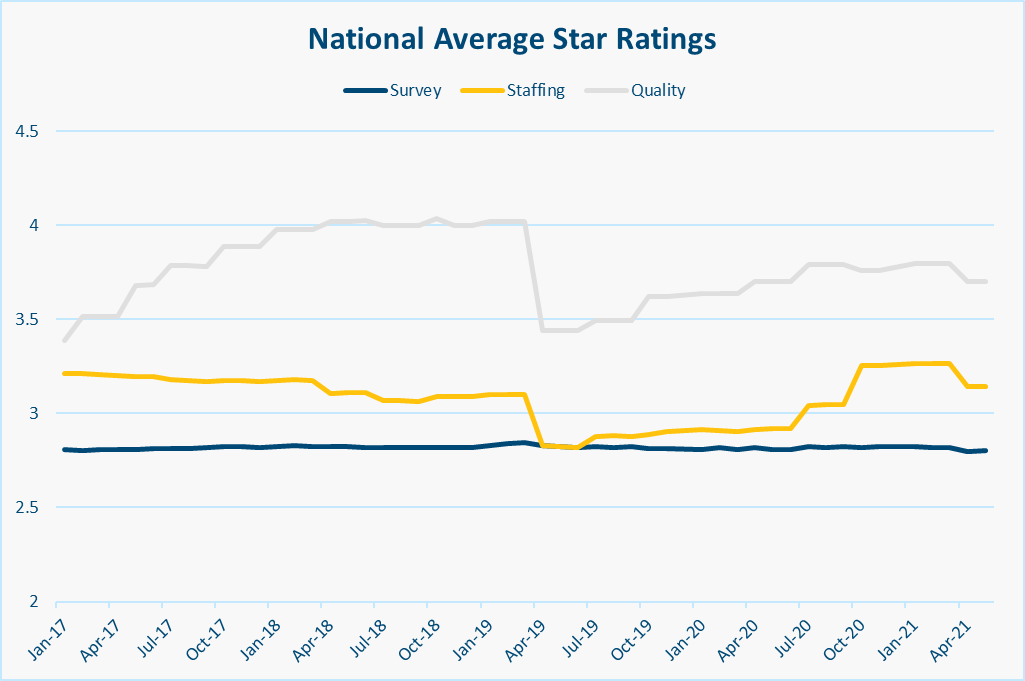

The last point from each memo is particularly important, because the national average Quality Star Rating is much higher than the other domains:

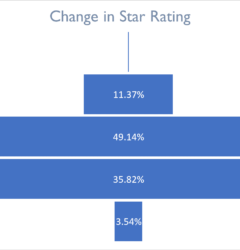

The April 2019 shift in cutpoints was designed to reduce the inflated QM scores, and it did! Unfortunately, CMS didn’t hold to their plans and the QM cutpoints are still the same today as they were in April 2019. By their own schedule, CMS has missed 3 of these adjustments, and you can see the slow creep back up towards a nationwide average of 4 stars. Obviously Covid-19 threw a wrench in the works, both in terms of the actual measures and the implementation of the plan to adjust their cutpoints. But again, CMS needs to pick up where they left off addressing inflation and continue to raise the bar by adjusting the cutpoints.

Is Underreporting an Issue?

The issue with inflation may run deeper than just the Five-Star cutpoints. The New York Times piece and the studies it cites clearly imply that there are issues with underreporting in the Quality Measures. While this is troubling if true, there are problems with both studies. Underreporting of falls with major injury is a serious concern, but there are issues baked right into the measure’s title. How severe must an injury be to be considered “major”? To hear a plaintiff’s attorney tell it, a scratch or a bruise is “major” enough for a seven-figure settlement, let alone a “ding” in one of 15 measures on the last Five-Star domain to be considered. Steven Littlehale already addressed several concerns with the second study. The upshot here is that no self-reported system is perfect, but the MDS is an extremely effective vehicle for recording patient assessments. Staff are trained hard on how to fill them out right, and while there’s certainly room for improvement, they’re still the best window we have into how the residents are doing. So we’re left where we always are, and have to resort to recommending audits. While nursing home operators and nurses cringe at the continued scrutiny, an audit of their MDS filings provides a prime opportunity to build faith in their accuracy.

A Quagmire of Complexity

For this last section, we’re going beyond the 15 measures used in Five-Star. Our issues here are how complicated the measures are and how many of them are tracked. To start with, most have absurdly long names like “Application of Percent of Long-Term Care Hospital (LTCH) Patients With an Admission and Discharge Functional Assessment and a Care Plan That Addresses Function”. CMS tries to simplify this on Care Compare, but we’re still left with “Percentage of SNF residents whose functional abilities were assessed and functional goals were included in their treatment plan”. That’s not much better for the layman.

Then we have the measure attributes. Short stay vs. Long stay makes sense, but taking it one step further results in more confusion. MDS-based measures cover a different time period from claims-based measures. Risk adjustment is a smart idea to give facilities a fair shake based on acuity, but try explaining to a regular person what a covariate is, and then, for extra fun, try explaining why the QRP measure Change in Self-Care Score has SIXTY-TWO of them. Some measures are percentages, others are just numbers. In some cases, it looks like CMS has given up and just resorted to “better than” or “worse than the national average”. Not to mention the fact that for some measures, higher numbers are better and for others, lower is best. CMS has tried to address this with explanatory text on Care Compare, but I’m sure people are still confused. There’s just too much interpretation to be done on all sides.

Lastly, different measures are used for different things, and Care Compare has become kind of a blanket dumping ground for them all. A quick canter through the datasets powering Care Compare turns up:

- 18 MDS-based measures

- 4 Claims-based (but non-QRP) measures

- 13 QRP measures

- 1 VBP measure

This totals 36 measures, and that doesn’t count QRP APU Threshold, which attempts to ensure that facilities submit the correct information required for QRP measures. Only 15 measures are used in Five-Star, and aside from the APU, only 1 measure affects reimbursement via VBP. I’m confused even just writing this. How can we expect everyday people to use this information when there’s so much of it?

Wrapping Up

We’ve covered a lot of ground here. Let’s summarize with a few concrete recommendations for how we think CMS could improve:

- Raise the profile of the Quality Rating. There’s no reason it should be the last thing considered.

- Re-commit to constant improvement. Avoid quality rating inflation by constantly raising the bar and shifting cutpoints. And don’t forget to incorporate patient satisfaction.

- Bolster trust by conducting audits. Self-reporting via MDS is great, but things happen. Let’s double-check every now and then.

- Simplify, simplify. Having 36 QMs in 5 categories with super long names is a pain for DONs and administrators to track and improve, and it’s unfair to ask the public to understand. Trim, re-focus and add context.

If precedent is any indication, it’ll be a while before any of this happens. But here’s hoping. Use the form below to subscribe to our newsletter and we’ll notify you next month as we wrap up this series and consider the future of the Five-Star program.
